How to Set Up a K3S Cluster in 2025

Follow my step-by-step journey as I rebuild my K3s cluster from scratch using Ansible. Learn how to automate deployments, optimize performance, and create a scalable Kubernetes homelab.

It’s already been a year since my first Kubernetes journey. My initial clusters—where I started learning and understanding more about Kubernetes—are now all taken down. This time, I want to build a fully functional, highly available (HA) cluster.

Over the past weeks, I’ve done more research in Kubernetes communities and on subreddits such as r/kubernetes, r/homelab, and r/selfhosted. I discovered that one of the best ways to deploy a cluster these days is by following guides and content from Techno Tim, so I decided to write this post and share my own approach.

Tip: If you’re new to K3s, subreddits like r/kubernetes and r/homelab can be great resources to learn from fellow enthusiasts.

What I Want to Achieve

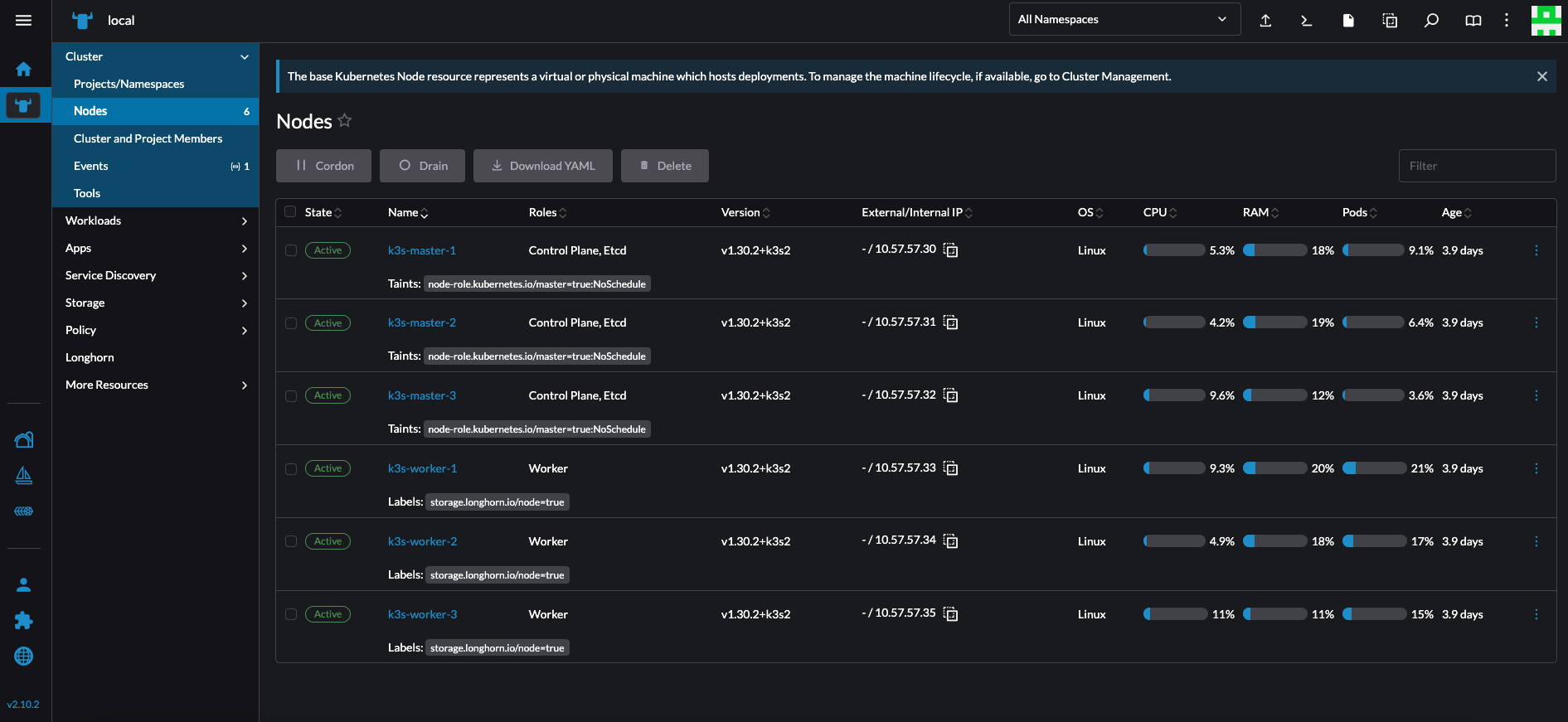

A fully organized HA cluster on my hardware, so if any of my machines go down, the cluster remains functional. Specifically:

- 1 x DELL R720 → k3s-master-1 and k3s-worker-1

- 1 x DELL Optiplex Micro 3050 → k3s-master-2 and k3s-worker-2

- 1 x DELL Optiplex Micro 3050 → k3s-master-3 and k3s-worker-3

How I Will Deploy

I will create six virtual machines (VMs) on a Proxmox cluster:

- 3 x Ubuntu 24.04 Master Nodes

- 3 x Ubuntu 24.04 Worker Nodes

The goal is to run K3s on these VMs to set up a solid Kubernetes environment with redundancy.

Chapter 1: Preparing DNS and IP Addresses

When setting up a Kubernetes cluster, DNS and IP management are crucial. Below is how I handle DHCP, static IP assignments, and DNS entries in my homelab environment.

DHCP Configuration

There are two possible scenarios for assigning IP addresses to your VMs:

- Use IP addresses outside of your DHCP range: This method is often preferred, as your machines will keep their manually configured network settings even if your DHCP server goes down.

- DHCP static mappings: You can map `MAC -> IP` in your network services to allocate IP addresses to VMs based on their MAC addresses.

Tip: If you choose the second scenario, make sure you document your static leases carefully. Proper documentation avoids conflicts and confusion later.

My Approach

I chose the first scenario, where I use IPs outside the DHCP range. This ensures my network remains stable if the DHCP service is unavailable.

- IP Range: 10.57.57.30/24 → 10.57.57.35/24 for my VMs

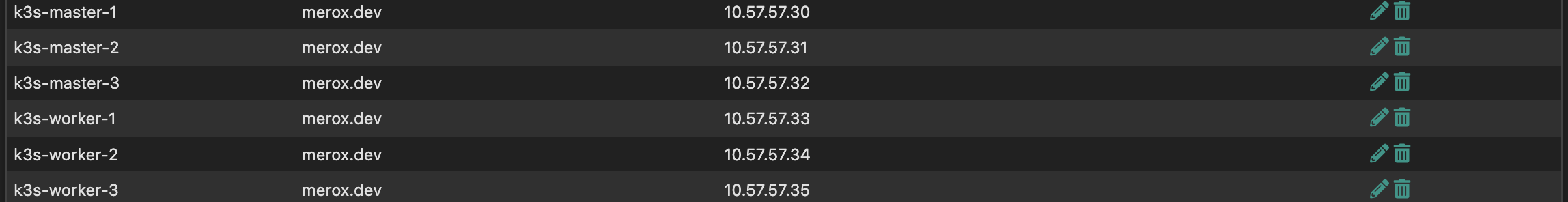
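As a rough sketch of the addressing plan above, the snippet below lists the six VM addresses and checks that none of them falls inside the DHCP pool. The pool bounds (last octets 100 to 200) are an assumption made for illustration, not values from my network, so substitute your own.

```bash
#!/bin/bash
# Sketch: enumerate the static VM addresses and confirm they sit outside the DHCP pool.
# ASSUMPTION: the DHCP pool covers last octets 100-200; adjust to your router's settings.
IP_PREFIX="10.57.57."
IP_START=30
NUM_VMS=6
DHCP_POOL_START=100   # hypothetical value
DHCP_POOL_END=200     # hypothetical value

# Print one address per VM, starting at IP_START
list_vm_ips() {
  local i
  for ((i = 0; i < NUM_VMS; i++)); do
    echo "${IP_PREFIX}$((IP_START + i))"
  done
}

# Return 0 if the given last octet is outside the DHCP pool
outside_dhcp_pool() {
  local octet=$1
  (( octet < DHCP_POOL_START || octet > DHCP_POOL_END ))
}

for ip in $(list_vm_ips); do
  octet=${ip##*.}
  if outside_dhcp_pool "$octet"; then
    echo "$ip ok (outside DHCP pool)"
  else
    echo "$ip CONFLICT (inside DHCP pool)"
  fi
done
```

Running a check like this before creating the VMs is cheaper than chasing a duplicate-address problem afterwards.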

DNS Setup

I also set up a DNS entry in my Unbound service on pfSense to easily manage and access my machines. For instance, you can create an A record or similar DNS record type pointing to your VM’s IP address. Below is a simple example:

DNS configuration in pfSense Unbound


Chapter 2: Automated VM Deployment on Proxmox with Cloud-Init

To streamline the next steps, I’ve created a bash script that automates crucial parts of the process, including:

- Creating a Cloud-Init template

- Deploying multiple VMs with static IP addresses

- Destroying the VMs if needed

If you prefer an even more automated approach using tools like Packer or Terraform, I suggest checking out this related post: Homelab as Code and adapting it to your specific scenario. However, for this blog, I’ll demonstrate a simpler, more direct approach using the script below.

Warning: This script can create or destroy VMs. Use it carefully and always keep backups of critical data.

Prerequisites

- Make sure you have Proxmox up and running.

- You’ll need to place your SSH public key (e.g., /root/.ssh/id_rsa.pub) on the Proxmox server before running the script.

Script Overview

Option 1: Create Cloud-Init Template

- Downloads the Ubuntu Cloud image (currently Ubuntu 24.04, code-named “noble”)

- Creates a VM based on the Cloud-Init image

- Converts it into a template

Option 2: Deploy VMs

- Clones the Cloud-Init template to create the desired number of VMs

- Configures IP addressing, gateway, DNS, search domain, SSH key, etc.

- Adjusts CPU, RAM, and disk size to fit your needs

Option 3: Destroy VMs

- Stops and removes VMs created by this script

During the VM creation process, you’ll be prompted to enter the VM name for each instance (e.g., k3s-master-1, k3s-master-2, etc.).

Tip: To fully automate naming, you could edit the script to increment VM names automatically. However, prompting ensures you can organize VMs with custom naming.
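One possible way to automate the naming is sketched below. It assumes the first three VMs are masters and the rest are workers; the function name `vm_name_for_index` and the `NUM_MASTERS` variable are hypothetical and are not part of the script.

```bash
#!/bin/bash
# Sketch: derive a VM name from its zero-based index instead of prompting.
# ASSUMPTION: the first NUM_MASTERS VMs are masters, the rest are workers.
NUM_MASTERS=3

vm_name_for_index() {
  local i=$1
  if (( i < NUM_MASTERS )); then
    echo "k3s-master-$((i + 1))"
  else
    echo "k3s-worker-$((i - NUM_MASTERS + 1))"
  fi
}

# Print the names for a six-VM deployment
for i in 0 1 2 3 4 5; do
  vm_name_for_index "$i"
done
```

In the deployment loop, the prompt for the VM name could then be swapped for a call such as `VM_NAME=$(vm_name_for_index "$i")`.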

The Bash Script

Below is the full script. Feel free to customize it based on your storage, networking, and naming preferences.

```bash
#!/bin/bash

# Function to get user input with a default value
get_input() {
    local prompt=$1
    local default=$2
    local input
    read -r -p "$prompt [$default]: " input
    echo "${input:-$default}"
}

# Ask the user whether they want to create a template, deploy or destroy VMs
echo "Select an option:"
echo "1) Create Cloud-Init Template"
echo "2) Deploy VMs"
echo "3) Destroy VMs"
read -r -p "Enter your choice (1, 2, or 3): " ACTION

if [[ "$ACTION" != "1" && "$ACTION" != "2" && "$ACTION" != "3" ]]; then
    echo "❌ Invalid choice. Please run the script again and select 1, 2, or 3."
    exit 1
fi

# === OPTION 1: CREATE CLOUD-INIT TEMPLATE ===
if [[ "$ACTION" == "1" ]]; then
    TEMPLATE_ID=$(get_input "Enter the template VM ID" "300")
    STORAGE=$(get_input "Enter the storage name" "local")
    TEMPLATE_NAME=$(get_input "Enter the template name" "ubuntu-cloud")
    IMG_URL="https://cloud-images.ubuntu.com/noble/current/noble-server-cloudimg-amd64.img"
    IMG_FILE="/root/noble-server-cloudimg-amd64.img"

    echo "📥 Downloading Ubuntu Cloud image..."
    cd /root
    wget -O "$IMG_FILE" "$IMG_URL" || { echo "❌ Failed to download the image"; exit 1; }

    echo "🖥️ Creating VM $TEMPLATE_ID..."
    qm create "$TEMPLATE_ID" --memory 2048 --cores 2 --name "$TEMPLATE_NAME" --net0 virtio,bridge=vmbr0

    echo "💾 Importing disk to storage ($STORAGE)..."
    qm disk import "$TEMPLATE_ID" "$IMG_FILE" "$STORAGE" || { echo "❌ Failed to import disk"; exit 1; }

    echo "🔗 Attaching disk..."
    qm set "$TEMPLATE_ID" --scsihw virtio-scsi-pci --scsi0 "$STORAGE:vm-$TEMPLATE_ID-disk-0"

    echo "☁️ Adding Cloud-Init drive..."
    qm set "$TEMPLATE_ID" --ide2 "$STORAGE:cloudinit"

    echo "🛠️ Configuring boot settings..."
    qm set "$TEMPLATE_ID" --boot c --bootdisk scsi0

    echo "🖧 Adding serial console..."
    qm set "$TEMPLATE_ID" --serial0 socket --vga serial0

    echo "📌 Converting VM to template..."
    qm template "$TEMPLATE_ID"

    echo "✅ Cloud-Init Template created successfully!"
    exit 0
fi

# === OPTION 2: DEPLOY VMs ===
if [[ "$ACTION" == "2" ]]; then
    TEMPLATE_ID=$(get_input "Enter the template VM ID" "300")
    START_ID=$(get_input "Enter the starting VM ID" "301")
    NUM_VMS=$(get_input "Enter the number of VMs to deploy" "6")
    STORAGE=$(get_input "Enter the storage name" "dataz2")
    IP_PREFIX=$(get_input "Enter the IP prefix (e.g., 10.57.57.)" "10.57.57.")
    IP_START=$(get_input "Enter the starting IP last octet" "30")
    GATEWAY=$(get_input "Enter the gateway IP" "10.57.57.1")
    DNS_SERVERS=$(get_input "Enter the DNS servers (space-separated)" "8.8.8.8 1.1.1.1")
    DOMAIN_SEARCH=$(get_input "Enter the search domain" "merox.dev")
    DISK_SIZE=$(get_input "Enter the disk size (e.g., 100G)" "100G")
    RAM_SIZE=$(get_input "Enter the RAM size in MB" "16384")
    CPU_CORES=$(get_input "Enter the number of CPU cores" "4")
    CPU_SOCKETS=$(get_input "Enter the number of CPU sockets" "4")
    SSH_KEY_PATH=$(get_input "Enter the SSH public key file path" "/root/.ssh/id_rsa.pub")

    if [[ ! -f "$SSH_KEY_PATH" ]]; then
        echo "❌ Error: SSH key file not found at $SSH_KEY_PATH"
        exit 1
    fi

    for i in $(seq 0 $((NUM_VMS - 1))); do
        VM_ID=$((START_ID + i))
        IP="$IP_PREFIX$((IP_START + i))/24"
        VM_NAME=$(get_input "Enter the name for VM $VM_ID" "ubuntu-vm-$((i+1))")

        echo "🔹 Creating VM: $VM_ID (Name: $VM_NAME, IP: $IP)"

        # Remove any existing VM that already uses this ID
        if qm status "$VM_ID" &>/dev/null; then
            echo "⚠️ VM $VM_ID already exists, removing..."
            qm stop "$VM_ID" &>/dev/null
            qm destroy "$VM_ID"
        fi

        if ! qm clone "$TEMPLATE_ID" "$VM_ID" --full --name "$VM_NAME" --storage "$STORAGE"; then
            echo "❌ Failed to clone VM $VM_ID, skipping..."
            continue
        fi

        qm set "$VM_ID" --memory "$RAM_SIZE" \
            --cores "$CPU_CORES" \
            --sockets "$CPU_SOCKETS" \
            --cpu host \
            --serial0 socket \
            --vga serial0 \
            --ipconfig0 "ip=$IP,gw=$GATEWAY" \
            --nameserver "$DNS_SERVERS" \
            --searchdomain "$DOMAIN_SEARCH" \
            --sshkey "$SSH_KEY_PATH"

        # Recreate the Cloud-Init drive on the target storage
        qm set "$VM_ID" --delete ide2 || true
        qm set "$VM_ID" --ide2 "$STORAGE:cloudinit,media=cdrom"
        qm cloudinit update "$VM_ID"

        echo "🔄 Resizing disk to $DISK_SIZE..."
        # An absolute size sets the disk to $DISK_SIZE;
        # a leading "+" would instead grow it by that amount.
        qm resize "$VM_ID" scsi0 "$DISK_SIZE"

        qm start "$VM_ID"
        echo "✅ VM $VM_ID ($VM_NAME) created and started!"
    done
    exit 0
fi

# === OPTION 3: DESTROY VMs ===
if [[ "$ACTION" == "3" ]]; then
    START_ID=$(get_input "Enter the starting VM ID to delete" "301")
    NUM_VMS=$(get_input "Enter the number of VMs to delete" "6")

    echo "⚠️ Destroying VMs from $START_ID to $((START_ID + NUM_VMS - 1))..."

    for i in $(seq 0 $((NUM_VMS - 1))); do
        VM_ID=$((START_ID + i))
        if qm status "$VM_ID" &>/dev/null; then
            echo "🛑 Stopping and destroying VM $VM_ID..."
            qm stop "$VM_ID" &>/dev/null
            qm destroy "$VM_ID"
        else
            echo "ℹ️ VM $VM_ID does not exist. Skipping..."
        fi
    done

    echo "✅ Specified VMs have been destroyed."
    exit 0
fi
```

Verifying Your Deployment

After running the script under Option 2, you should see your new VMs listed in the Proxmox web interface. You can now log in via SSH from the machine that holds the corresponding private key:

```bash
ssh ubuntu@k3s-master-1
```

Note: Adjust the hostname or IP as configured during the script prompts.

Chapter 3: Installing K3s with Ansible

This chapter will guide you through setting up K3s using Ansible on your Proxmox-based VMs. Ansible helps automate the process across multiple nodes, making the deployment faster and more reliable.

Prerequisites

Ensure Ansible is installed on your management machine (Debian/Ubuntu or macOS):

Debian/Ubuntu:

```bash
sudo apt update && sudo apt install -y ansible
```

macOS:

```bash
brew install ansible
```

Clone the k3s-ansible repository

This guide uses my fork of Techno Tim’s k3s-ansible repository:

```bash
git clone https://github.com/meroxdotdev/k3s-ansible
```

Pre-Deployment Configuration

- Set up the Ansible environment:

```bash
cd k3s-ansible
cp ansible.example.cfg ansible.cfg
ansible-galaxy install -r ./collections/requirements.yml
cp -R inventory/sample inventory/my-cluster
```

- Edit `inventory/my-cluster/hosts.ini`. Modify this file to match your cluster’s IP addresses. Example:

```ini
[master]
10.57.57.30
10.57.57.31
10.57.57.32

[node]
10.57.57.33
10.57.57.34
10.57.57.35

[k3s_cluster:children]
master
node
```
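If you would rather generate the inventory than type it, something along these lines should work. It is only a sketch: it assumes the masters take the first consecutive addresses and the workers the next ones, and `generate_hosts_ini` is a hypothetical helper, not part of the k3s-ansible repository.

```bash
#!/bin/bash
# Sketch: print a hosts.ini with masters followed by workers on consecutive IPs.
# ASSUMPTION: masters take the first addresses and workers the next ones.
# Usage: generate_hosts_ini <ip_prefix> <first_octet> <num_masters> <num_workers>
generate_hosts_ini() {
  local prefix=$1 start=$2 masters=$3 workers=$4
  local i
  echo "[master]"
  for ((i = 0; i < masters; i++)); do
    echo "${prefix}$((start + i))"
  done
  echo
  echo "[node]"
  for ((i = 0; i < workers; i++)); do
    echo "${prefix}$((start + masters + i))"
  done
  echo
  echo "[k3s_cluster:children]"
  echo "master"
  echo "node"
}

generate_hosts_ini "10.57.57." 30 3 3
```

Redirecting the output to `inventory/my-cluster/hosts.ini` would replace the sample file with the generated one.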

- Edit `inventory/my-cluster/group_vars/all.yml`. Some critical fields to modify:

- `ansible_user`: The default VM user is `ubuntu`, with sudo privileges.

- `system_timezone`: Set to your local timezone (e.g., `Europe/Bucharest`).

- Networking (Calico vs. Flannel): Comment out the Flannel line (`#flannel_iface: eth0`) and set `calico_iface: "eth0"` for better network policies. Flannel is the simpler alternative if you prefer an easier setup.

- `apiserver_endpoint`: `10.57.57.100`. Ensure this is an unused IP in your local network. It serves as the VIP (Virtual IP) for the k3s control plane.

- `k3s_token`: Use any alphanumeric string.

- `metal_lb_ip_range`: `10.57.57.80-10.57.57.90`. The range must belong to your local network (LAN), must not already be in use by other network services, and must sit outside your DHCP pool to avoid conflicts. This setup enables exposing K3s container services to your network, similar to how Docker ports are exposed on their host IP.
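Because the VIP, the MetalLB range, and the node addresses all share one subnet, a quick overlap check can catch mistakes before the playbook runs. The sketch below hard-codes the values from this guide; the helper names `ip_to_int` and `ip_in_range` are hypothetical.

```bash
#!/bin/bash
# Sketch: sanity-check the VIP and MetalLB range against the node addresses.

# Convert a dotted IPv4 address to an integer so ranges can be compared numerically
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# Return 0 if IP ($1) lies within the inclusive range START ($2) to END ($3)
ip_in_range() {
  local ip start end
  ip=$(ip_to_int "$1")
  start=$(ip_to_int "$2")
  end=$(ip_to_int "$3")
  (( ip >= start && ip <= end ))
}

APISERVER_ENDPOINT="10.57.57.100"
METALLB_START="10.57.57.80"
METALLB_END="10.57.57.90"
NODE_IPS="10.57.57.30 10.57.57.31 10.57.57.32 10.57.57.33 10.57.57.34 10.57.57.35"

status=0
for node in $NODE_IPS; do
  if [[ "$node" == "$APISERVER_ENDPOINT" ]] || ip_in_range "$node" "$METALLB_START" "$METALLB_END"; then
    echo "conflict: $node overlaps the VIP or the MetalLB range"
    status=1
  fi
done
if ip_in_range "$APISERVER_ENDPOINT" "$METALLB_START" "$METALLB_END"; then
  echo "conflict: the VIP lies inside the MetalLB range"
  status=1
fi
(( status == 0 )) && echo "no overlaps found"
```

This only compares the values against each other. It cannot tell whether another device on the LAN already uses one of the addresses.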

Before running the next command, ensure SSH key authentication is set up between your management machine and all deployed VMs.

Deploy the Cluster

Run the following command to deploy the cluster:

```bash
ansible-playbook ./site.yml -i ./inventory/my-cluster/hosts.ini
```

Once the playbook execution completes, you can verify the cluster’s status:

```bash
# Copy the kubeconfig file from the first master node
# (replace <user>@<first-master-ip>, e.g. ubuntu@10.57.57.30 for the inventory above)
scp <user>@<first-master-ip>:~/.kube/config .

# Move it to the correct location
mkdir -p ~/.kube
mv config ~/.kube/

# Check if the cluster nodes are properly registered
kubectl get nodes
```

If the setup was successful, kubectl get nodes should display the cluster’s nodes and their statuses.
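For a scripted check, the node list can be piped through a small filter that counts how many nodes report `Ready`. This is a sketch with a hypothetical helper name, `count_ready_nodes`. It assumes the default column layout of `kubectl get nodes --no-headers`, where the second column is the status.

```bash
#!/bin/bash
# Sketch: count nodes whose STATUS column is exactly "Ready".
# Reads `kubectl get nodes --no-headers` style output from stdin.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage against a live cluster (requires kubectl and a valid kubeconfig):
#   kubectl get nodes --no-headers | count_ready_nodes
```

For the six-VM layout in this guide, the count should reach 6 once every node has joined.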

Chapter 4: K3S Apps Deployment

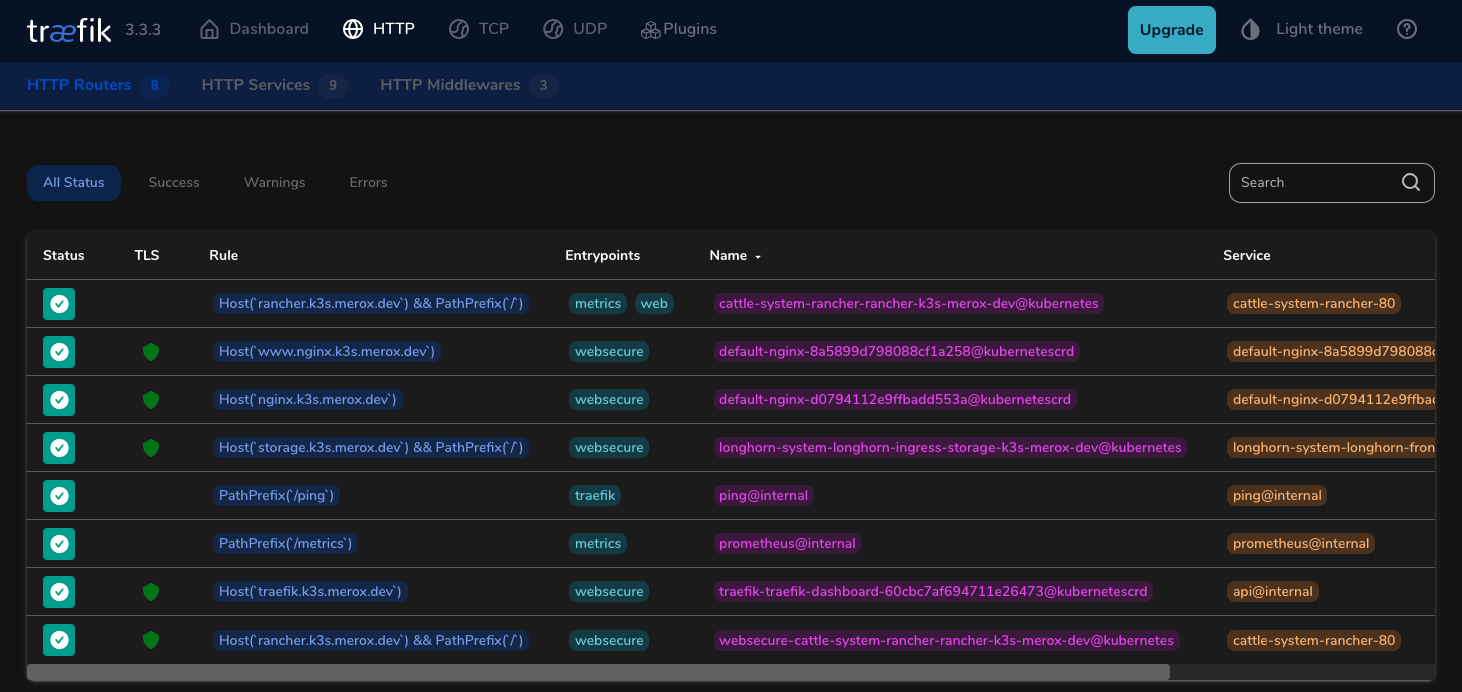

Deploying Traefik

Install Helm Package Manager for Kubernetes

```bash
curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
chmod 700 get_helm.sh
./get_helm.sh
```

Create Namespace for Traefik

```bash
kubectl create namespace traefik
```

Add Helm Repository and Update

```bash
helm repo add traefik https://helm.traefik.io/traefik
helm repo update
```

Clone TechnoTim Launchpad Repository

```bash
git clone https://github.com/techno-tim/launchpad
```

Configure values.yaml for Traefik

Open the launchpad/kubernetes/traefik-cert-manager/ directory and check values.yaml. Most configurations are already set; you only need to specify the IP for the LoadBalancer service. Choose an IP from the MetalLB range defined in your setup.

Install Traefik Using Helm

```bash
helm install --namespace=traefik traefik traefik/traefik --values=values.yaml
```

Verify Deployment

```bash
kubectl get svc --all-namespaces -o wide
```

Expected output:

```text
NAMESPACE       NAME           TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)                                     AGE     SELECTOR
calico-system   calico-typha   ClusterIP      10.43.80.131   <none>        5473/TCP                                    2d20h   k8s-app=calico-typha
traefik         traefik        LoadBalancer   10.43.185.67   10.57.57.80   80:32195/TCP,443:31598/TCP,443:31598/UDP    53s     app.kubernetes.io/instance=traefik,app.kubernetes.io/name=traefik
```

Apply Middleware

```bash
kubectl apply -f default-headers.yaml
kubectl get middleware
```

Expected output:

```text
NAME              AGE
default-headers   4s
```

Deploying Traefik Dashboard

Install htpasswd

```bash
sudo apt-get update
sudo apt-get install apache2-utils
```

Generate a Base64-Encoded Credential

```bash
htpasswd -nb merox password | openssl base64
```

Copy the generated password hash and replace abc123== with it in dashboard/secret-dashboard.yaml:

```yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: traefik-dashboard-auth
  namespace: traefik
type: Opaque
data:
  users: abc123==
```
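Values under `data:` in a Secret must be base64-encoded, which is why the htpasswd output is piped through a base64 encoder. The sketch below is a quick round-trip check: it encodes a value, decodes it again, and compares the result. It assumes a `base64` tool that accepts `-d` (as GNU coreutils does), and the credential string is a made-up placeholder, not real htpasswd output.

```bash
#!/bin/bash
# Sketch: confirm that a value destined for a Secret's data field decodes back to the original.
# ASSUMPTION: the credential below is a placeholder, not real htpasswd output.
credential='merox:placeholder-hash'

# Encode, stripping any line wrapping the encoder may add
encoded=$(printf '%s' "$credential" | base64 | tr -d '\n')
# Decode again to verify nothing was lost
decoded=$(printf '%s' "$encoded" | base64 -d)

echo "encoded: $encoded"
if [[ "$decoded" == "$credential" ]]; then
  echo "round trip ok"
else
  echo "round trip failed"
fi
```

If the dashboard later rejects your login, decoding the value stored in the Secret the same way is a quick first check.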

Apply secret:

```bash
kubectl apply -f secret-dashboard.yaml
```

Configure DNS Resolver

Ensure that your DNS server points to the MetalLB IP specified in values.yaml.

Example entry for pfSense DNS Resolver:

dashboard/ingress.yaml:

```yaml
routes:
  - match: Host(`traefik.k3s.your.domain`)
```

Apply Kubernetes Resources

From traefik/dashboard folder:

```bash
kubectl apply -f secret-dashboard.yaml
kubectl get secrets --namespace traefik
kubectl apply -f middleware.yaml
kubectl apply -f ingress.yaml
```

At this point, you should be able to access the DNS entry you created. However, it will use a self-signed SSL certificate generated by Traefik. In the next steps, we will configure Let’s Encrypt certificates using Cloudflare as the provider.

Deploying Cert-Manager

From traefik-cert-manager/cert-manager folder:

Add Jetstack Helm Repository

```bash
helm repo add jetstack https://charts.jetstack.io
helm repo update
```

Create Namespace for Cert-Manager

```bash
kubectl create namespace cert-manager
```

Apply CRDs (Custom Resource Definitions)

Note: Ensure you use the latest version of Cert-Manager.

```bash
kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.17.0/cert-manager.crds.yaml
```

Install Cert-Manager Using Helm

```bash
helm install cert-manager jetstack/cert-manager --namespace cert-manager --values=values.yaml --version v1.17.0
```

Apply Cloudflare API Secret

Make sure you generate the correct API token if using Cloudflare (use an API Token, not a global key).

```bash
kubectl apply -f issuers/secret-cf-token.yaml
```

Deploy Production Certificates

Edit these fields before applying the manifests:

- issuers/letsencrypt-production.yaml: email, dnsZones

- certificates/production/your-domain-com.yaml: name, secretName, commonName, dnsNames

```bash
kubectl apply -f issuers/letsencrypt-production.yaml
kubectl apply -f certificates/production/your-domain-com.yaml
```

Verify Logs and Challenges

```bash
kubectl logs -n cert-manager -f cert-manager-<your-instance-name>
kubectl get challenges
```
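DNS challenges can take a few minutes to clear, so rather than re-running the commands by hand you could poll with a small retry helper. The `retry` function below is a generic sketch, not something provided by cert-manager, and the certificate name in the usage comment is a placeholder.

```bash
#!/bin/bash
# Sketch: retry a command until it succeeds or the attempts run out.
# Usage: retry <max_attempts> <delay_seconds> <command...>
retry() {
  local max=$1 delay=$2 attempt=1
  shift 2
  until "$@"; do
    if (( attempt >= max )); then
      echo "giving up after $attempt attempts" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Example (requires a live cluster; the certificate name is a placeholder):
#   retry 30 10 kubectl wait --for=condition=Ready certificate/your-domain-com --timeout=5s
```

The helper returns non-zero once the attempts are exhausted, so it can gate later steps in a script.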

With these steps completed, your K3s cluster now runs Traefik as an ingress controller, supports HTTPS with Let’s Encrypt, and manages certificates automatically. This setup ensures secure traffic routing and efficient load balancing for your Kubernetes applications.

Deploying Rancher

Add Rancher Helm Repository and Create Namespace

```bash
helm repo add rancher-stable https://releases.rancher.com/server-charts/stable
kubectl create namespace cattle-system
```

Since Traefik is already deployed, Rancher will utilize it for ingress. Deploy Rancher with Helm:

```bash
helm install rancher rancher-stable/rancher \
  --namespace cattle-system \
  --set hostname=rancher.k3s.your.domain \
  --set tls=external \
  --set replicas=3
```

Create Ingress for Rancher

Create an ingress.yml file with the following configuration:

```yaml
apiVersion: traefik.io/v1alpha1
kind: IngressRoute
metadata:
  name: rancher
  namespace: cattle-system
spec:
  entryPoints:
    - websecure
  routes:
    - match: Host(`rancher.k3s.your.domain`)
      kind: Rule
      services:
        - name: rancher
          port: 443
      middlewares:
        - name: default-headers
  tls:
    secretName: k3s-your-domain-tls
```

Apply the ingress configuration:

```bash
kubectl apply -f ingress.yml
```

Now, you should be able to manage your cluster from https://rancher.k3s.your.domain.

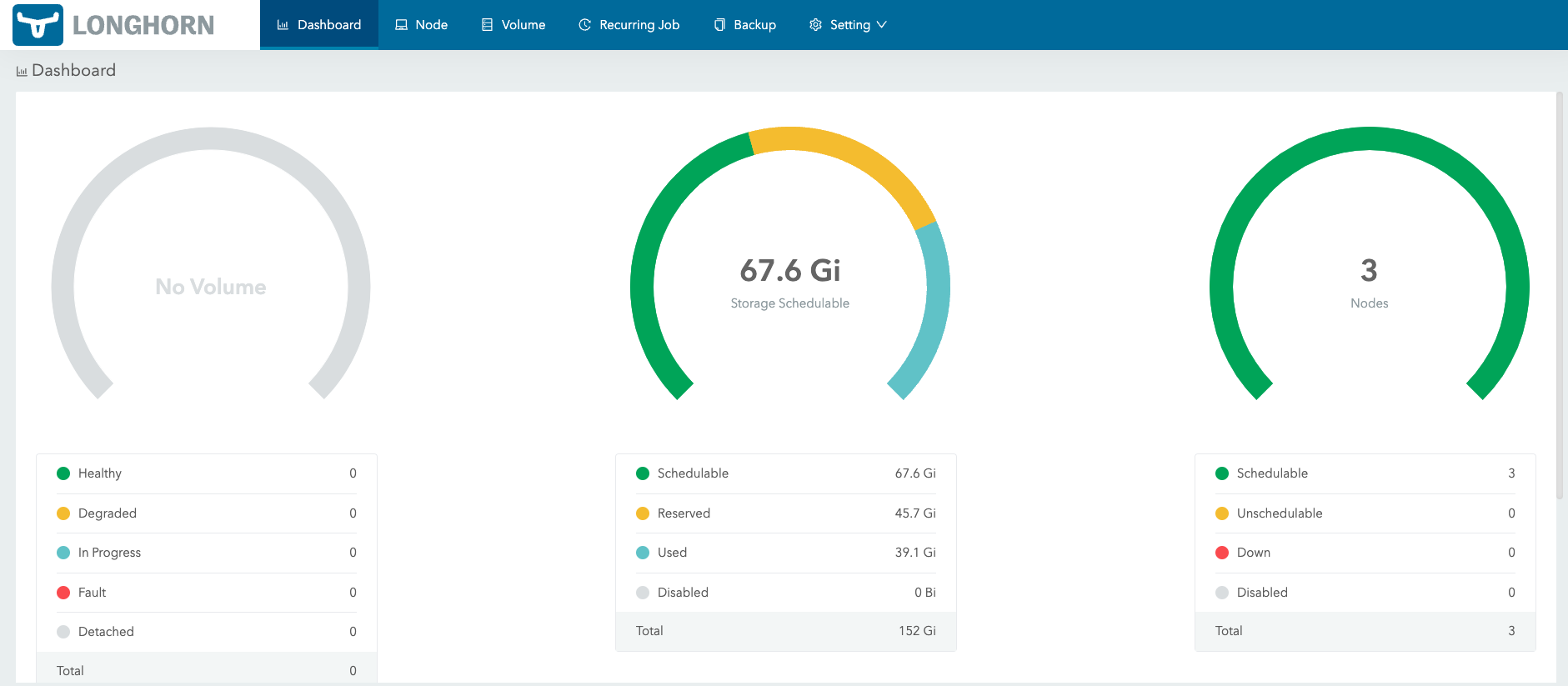

Deploying Longhorn

If you want shared, cloud-native block storage for your cluster, follow these steps:

Install Required Packages

Run this only on the VMs where you want to deploy Longhorn:

```bash
sudo apt update && sudo apt install -y open-iscsi nfs-common
```

Enable iSCSI

```bash
sudo systemctl enable iscsid
sudo systemctl start iscsid
```

Add Longhorn Label on Nodes

A minimum of three nodes is required for High Availability. In this setup, we will use the three worker nodes:

```bash
kubectl label node k3s-worker-1 storage.longhorn.io/node=true
kubectl label node k3s-worker-2 storage.longhorn.io/node=true
kubectl label node k3s-worker-3 storage.longhorn.io/node=true
```
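With more nodes, the same labelling can be done in a loop. The sketch below defaults to a dry run that only prints the commands; the `DRY_RUN` switch and the `label_storage_nodes` function are hypothetical conveniences. The `--overwrite` flag is added so the command can be re-run on nodes that already carry the label.

```bash
#!/bin/bash
# Sketch: build the label commands for every storage node.
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to run them.
DRY_RUN=${DRY_RUN:-1}
STORAGE_NODES="k3s-worker-1 k3s-worker-2 k3s-worker-3"

label_storage_nodes() {
  local node
  for node in $STORAGE_NODES; do
    if [[ "$DRY_RUN" == "1" ]]; then
      echo "kubectl label node $node storage.longhorn.io/node=true --overwrite"
    else
      kubectl label node "$node" storage.longhorn.io/node=true --overwrite
    fi
  done
}

label_storage_nodes
```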

Deploy Longhorn

This manifest is modified to use the storage.longhorn.io/node=true label:

```bash
kubectl apply -f https://raw.githubusercontent.com/meroxdotdev/merox.docs/refs/heads/master/K3S/cluster-deployment/longhorn.yaml
```

Verify Deployment

```bash
kubectl get pods --namespace longhorn-system --watch
```

Print Confirmation

```bash
kubectl get nodes
kubectl get svc -n longhorn-system
```

Exposing Longhorn with Traefik

Create Middleware Configuration

Create a middleware.yml file:

```yaml
apiVersion: traefik.io/v1alpha1
kind: Middleware
metadata:
  name: longhorn-headers
  namespace: longhorn-system
spec:
  headers:
    customRequestHeaders:
      X-Forwarded-Proto: "https"
```

Setup Ingress

Create an ingress.yml file:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: longhorn-ingress
  namespace: longhorn-system
  annotations:
    traefik.ingress.kubernetes.io/router.entrypoints: websecure
    traefik.ingress.kubernetes.io/router.tls: "true"
    traefik.ingress.kubernetes.io/router.middlewares: longhorn-system-longhorn-headers@kubernetescrd
spec:
  rules:
    - host: storage.k3s.your.domain
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: longhorn-frontend
                port:
                  number: 80
  tls:
    - hosts:
        - storage.k3s.your.domain
      secretName: k3s-your-domain-tls
```

Additional Resources

Using NFS Storage

If you want to use NFS storage in your cluster, follow this guide: Merox Docs - NFS Storage Guide

Monitoring Your Cluster

A great monitoring tool for your cluster is Netdata.

You can also try deploying Prometheus and Grafana from Rancher. However, if you don’t fine-tune the setup, you might notice a high resource usage due to the large number of queries processed by Prometheus.

Continuous Deployment with ArgoCD

ArgoCD is an excellent tool for continuous deployment. You can find more details here.

Upgrading Your Cluster

If you need to upgrade your cluster, I put some notes here: How to Upgrade K3s.

Final Thoughts

When I first deployed a K3s/RKE2 cluster (about a year ago), I struggled to find a single source of documentation that covered everything needed for at least a homelab, if not even for production use. Unfortunately, I couldn’t find anything comprehensive, so I decided to write this article to consolidate all the necessary information in one place.

If this guide helped you and you’d like to see more information added, please leave a comment, and I will do my best to update this post.

How Have You Deployed Your Clusters?

Let me know in the comments!